What if computation isn’t about FLOPS at all?

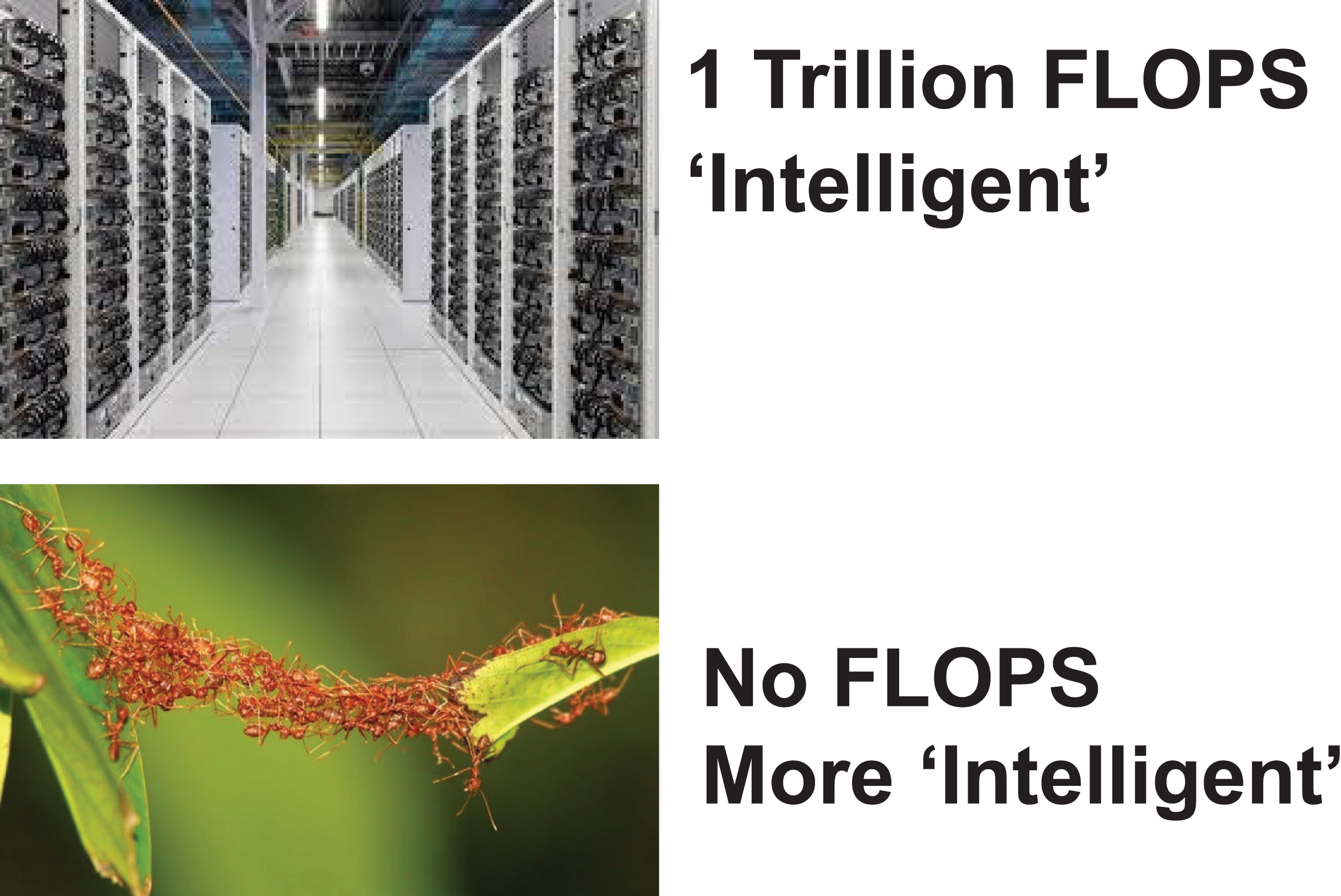

Every year, computers get faster, bigger, and hungrier for power (or faster, cheaper, and smaller, depending on how you look at it). We measure that growth in FLOPS: floating-point operations per second. The metric presumes that intelligence, the capacity to ‘compute’ something, is simply a matter of having enough raw power and brute force.

But what if this is the wrong way to think about computation?

The way we talk about computing power today is strangely narrow. Take the recent GenAI craze: the presumed path to progress is stacking more and more GPUs, burning more electricity, and running more operations per second. This has fueled huge breakthroughs (see LLMs and their frenzied adoption), but it has also created massive blind spots.

Consider a single bacterium: it works out how to survive, steering with its flagellum and chemotactic machinery, without anything resembling a supercomputer. The human brain, the most complex system we know of, runs on roughly 20 watts. OpenAI has not published the parameter count of GPT-4o, a recent ChatGPT model, but by several estimates it exceeds one trillion. Our brains have roughly 90 billion neurons, and they do far more than predict the next word in a sentence.

If FLOPS alone defined intelligence, none of this would make sense.

What if intelligence isn’t about performing operations but about compressing entropy into structure? Systems like brains, cells, and ecosystems don’t just process information; they compress it into rules, hierarchies, and constraints. They get away with less computation because their structure already encodes so much.
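A toy way to see the difference between raw data and structure is to compare how well each compresses. The sketch below is only an analogy, not a model of brains or cells: it assumes a general-purpose compressor (Python's standard `zlib`) as a rough proxy for "how much structure was found", and the names `compression_ratio`, `structured`, and `random_bytes` are hypothetical, chosen for illustration.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more structure was found)."""
    return len(zlib.compress(data, 9)) / len(data)


N = 100_000

# Hypothetical "structured" data: produced by a one-line rule (a repeating 16-byte pattern).
structured = bytes(i % 16 for i in range(N))

# Hypothetical "unstructured" data: bytes from the OS random source, near-maximal entropy.
random_bytes = os.urandom(N)

print(f"structured: {compression_ratio(structured):.4f}")
print(f"random:     {compression_ratio(random_bytes):.4f}")
```

The rule-generated sequence shrinks to a tiny fraction of its size because the compressor discovers the rule, while the random bytes do not shrink at all (the ratio lands slightly above 1 because of format overhead). A system that has captured the rule can reproduce the data without storing or processing every byte.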

We’ve spent decades chasing faster chips. But perhaps the next frontier of computation need not be about speed and scale at all. It might be about understanding how Nature computes, not through brute force, but through structured sparsity.